Science

The latest in science news, from the depths of space to the quantum realm.

Camera captures the world as animals see it, with up to 99% accuracy

January 25, 2024

It’s easy to forget that most animals don’t see the world the way humans do. In fact, many perceive colors that are invisible to us. But now, for the first time, scientists have found a way to capture footage as seen by animals, and it's mesmerizing.

Energy

World first energy storage unit demonstrates zero degradation over 5 years

April 15, 2024

China's CATL – the world's largest EV battery producer – has launched TENER, which is described as the "world's first mass-producible energy storage system with zero degradation in the first five years of use."

90-GWh thermal energy storage facility could heat a city for a year

April 09, 2024

An energy supplier in Finland has announced the upcoming construction of an underground seasonal thermal energy storage facility about the size of two Madison Square Gardens that could meet the heating demands of a medium-sized city for up to a year.

Condor-inspired retrofit boosts wind turbine energy production by 10%

April 03, 2024

The Andean condor’s drag-reducing aerodynamic wings have inspired the creation of a winglet, which, when added to a wind turbine blade, boosted energy production by an average of 10%, according to a new study.

Medical

Cow's milk particles unlock one of medicine’s most challenging puzzles

April 26, 2024

Cow’s milk contains nanoparticles that can be used to deliver RNA therapy orally, say researchers. With such drugs currently only administrable by injection, the discovery opens the door to cheaper, more accessible treatments for a range of diseases.

Anti-aging human tests begin – and $40 million of funding is behind it

April 24, 2024

California-based biopharmaceutical company Rubedo Life Sciences has announced that, thanks to US$40 million in financial backing, it can commence human trials of its drug RLS-1469, designed to target the senescent cells that cause age-related disease.

Your doctor is prescribing antibiotics that won't help – and may harm

April 23, 2024

US doctors haven’t been following the rules when it comes to prescribing antibiotics, according to new research. Despite the rise in antibiotic resistance, between 2017 and 2021 more than a quarter of antibiotics prescribed were for conditions they’re ineffective against.

Space

Nearby asteroid's birthplace traced to specific crater on the Moon

April 26, 2024

Many asteroids can be traced back to their parent body – the planet or moon they broke off from. But for the first time, scientists now claim to have traced the origins of an asteroid back to the specific crater it was birthed from.

NASA just hacked a 1977 computer on a spacecraft way out past Pluto

April 23, 2024

We have to cut the 47-year-old space veteran some slack – it's faring much better than our 2019 laptops – but Voyager 1's five months of communicating nonsense to Earth may be over, thanks to Mission Control's 15-billion-mile remote IT fix.

World's most advanced solar sail rockets into space

April 23, 2024

The world's most advanced solar sail spacecraft began its epic odyssey as it lifted off atop a Rocket Lab Electron launcher from Launch Complex 1 in Mahia, New Zealand. It was one of two payloads on the Beginning Of The Swarm mission.

Materials

Goldene: New 2D form of gold makes graphene look boring

April 16, 2024

Graphene is the Novak Djokovic of materials – it’s so damn talented that it’s getting boring celebrating each new victory. But an exciting new upstart is challenging graphene’s title. Meet goldene, a 2D sheet of gold with its own strange properties.

Graphite platform levitates without power

April 10, 2024

Magnetic levitation is used to float things like lamps and trains, but usually it requires a power source. Now, scientists in Japan have developed a way to make a floating platform that requires no external power, out of regular old graphite.

Harvard's bizarre "metafluid" packs programmable properties

April 09, 2024

Harvard engineers have created a strange new “metafluid” – a liquid that can be programmed to change properties, like its compressibility, transparency, viscosity and even whether it’s Newtonian or not.

Biology

Monstrous prehistoric salmon had "tusks like a warthog"

April 25, 2024

If a giant prehistoric salmon isn't scary enough for you, how about one with warthog-like tusks? According to a new study, Oncorhynchus rastrosus possessed just such appendages – even though the fish likely fed on tiny plankton.

Self-assembling synthetic cells act like living cells with extra abilities

April 24, 2024

Using DNA and proteins, scientists have created new synthetic cells that act like living cells. These cells can be reprogrammed to perform multiple functions, opening the door to new synthetic biology tech that goes beyond nature’s abilities.

AI-designed gene editing tools successfully modify human DNA

April 24, 2024

Medically, AI is helping us with everything from identifying abnormal heart rhythms before they happen to spotting skin cancer. But do we really need it to get involved with our genome? Protein-design company Profluent believes we do.

Environment

Soybean waste used to grow good "green" food for farmed fish

April 26, 2024

Fish farming may be getting much more eco-friendly, courtesy of soybean processing wastewater. Microbes in the liquid have been used to produce proteins that could replace the fishmeal which is currently fed to farmed fish.

Superfood protein pulled out of thin air massively scales up production

April 24, 2024

It's super-sustainable, easily made and nutrient-dense. And it puts all other food production to shame. Now, the first air-protein factory is open. It's the food of the future, and soon a $100 million industry – but will you be putting it on your plate?

45% of China's urban land is rapidly sinking due to manmade development

April 18, 2024

A perfect storm is brewing for China's cities due to rising sea levels and accelerating land subsidence. Scientists have now sounded the alarm that, without intervention, urban areas below sea level will triple by 2120, impacting up to 128 million people.

Physics

Think you understand evaporation? Think again, says MIT

April 25, 2024

We all know that water evaporates when the temperature climbs, but researchers have just shown that there's another factor at play. The breakthrough could solve long-standing atmospheric mysteries and lead to future technological advances.

Free software lets you design and test warp drives with real physics

April 16, 2024

Warp drives are among the more plausible of science fiction concepts, at least from a physics perspective. Now, a group of scientists and engineers has launched open-source software that lets you design and test scientifically accurate warp drives.

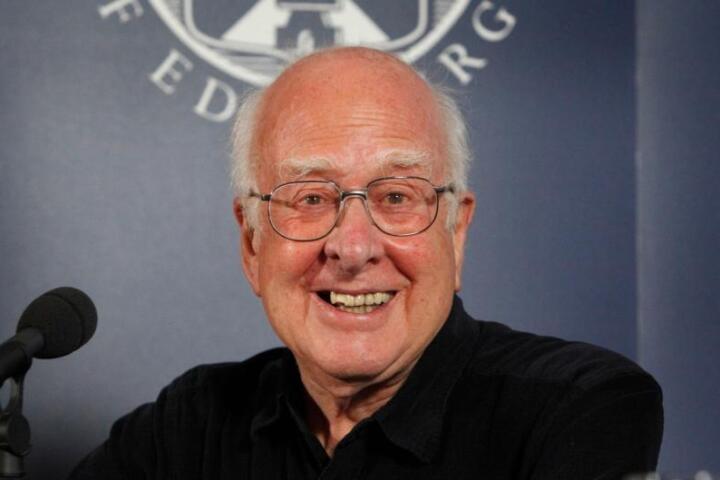

Professor Peter Higgs, renowned for Higgs boson prediction, dies aged 94

April 09, 2024

Professor Peter Higgs has died aged 94. The theoretical physicist was best known for his prediction of a key elementary particle, the Higgs boson, which earned him the 2013 Nobel Prize in Physics soon after its discovery.

Electronics

AI synthesizer bridges technology and creativity in music composition

February 15, 2024

SPIN challenges conventional notions of music creation by inviting users to collaborate with an AI language model called MusicGen. With its distinctive blend of a turntable and a drum machine, SPIN offers users a creative music composition tool.

Eye-tracking window tech tells sightseers about what they're looking at

January 05, 2024

If you're on a sightseeing tour in a bus, you really don't want to be looking away from the passing attractions to Google them on your smartphone. The AR Interactive Vehicle Display is intended to help, by showing relevant information on the vehicle's window glass.

Diamond data storage breakthrough writes and rewrites down to single atom

December 05, 2023

Diamond is a promising material for data storage, and now scientists have demonstrated a new way to cram more data onto it, down to a single atom. The technique bypasses a physical limit by writing data to the same spots in different-colored light.

Quantum Computing

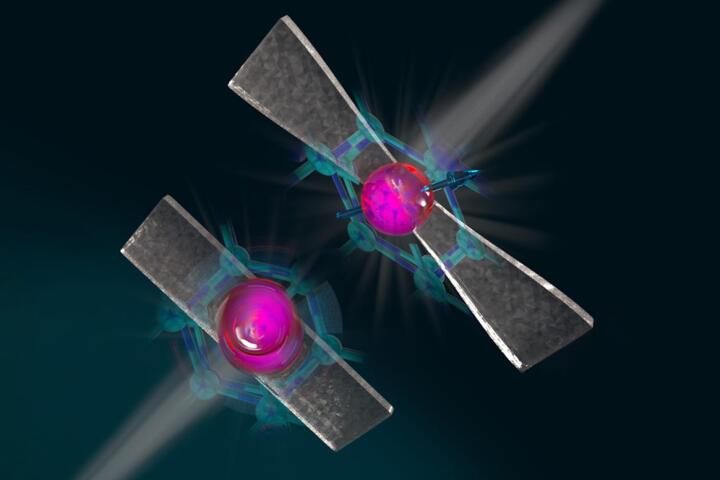

Diamond-stretching technique makes qubits more stable and controllable

November 30, 2023

Researchers are claiming a breakthrough in quantum communications, thanks to a new diamond-stretching technique they say greatly increases the temperatures at which qubits remain entangled, while also making them microwave-controllable.

Perovskite LED unlocks next-level quantum random number generation

September 05, 2023

Random numbers are critical to encryption algorithms, but truly random ones are nigh-on impossible for computers to generate. Now, Swedish researchers say they've created a new, super-secure quantum random number generator using cheap perovskite LEDs.

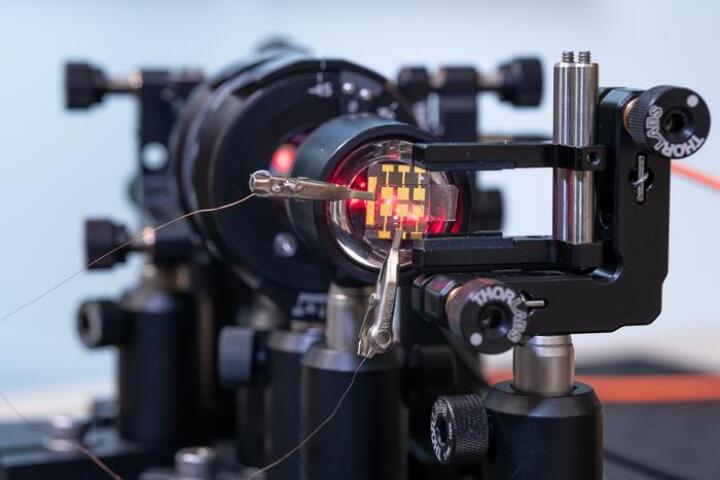

Silicon quantum computing surpasses 99% accuracy in three studies

January 19, 2022

Three teams of scientists have achieved a major milestone in quantum computing. All three groups demonstrated better than 99 percent accuracy in silicon-based quantum devices, paving the way for practical, scalable, error-free quantum computers.
