The Future of Life Institute has presented an open letter signed by over 1,000 robotics and artificial intelligence (AI) researchers urging the United Nations to impose a ban on the development of weaponized AI with the capability to target and kill without meaningful human intervention. The letter was presented at the 2015 International Joint Conference on Artificial Intelligence (IJCAI), and is backed by the endorsements of a number of prominent scientists and industry leaders, including Stephen Hawking, Elon Musk, Steve Wozniak, and Noam Chomsky.

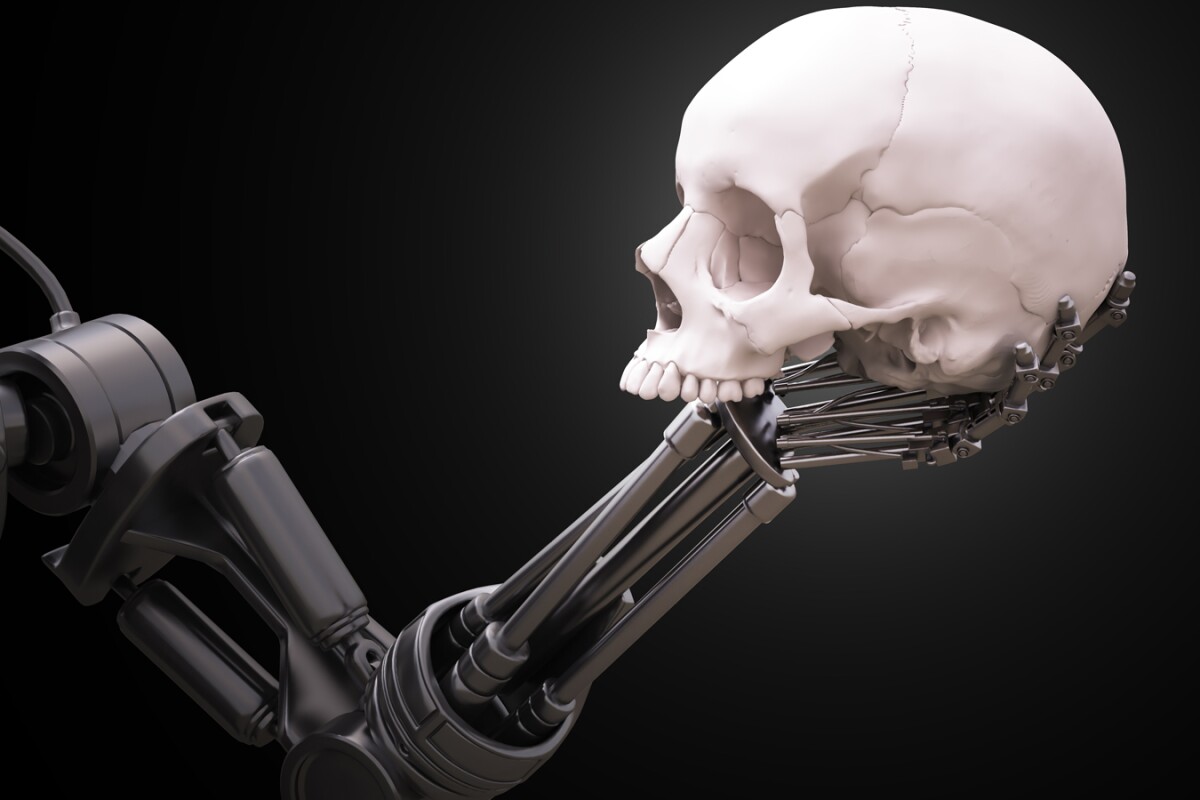

To some, armed and autonomous AI could seem a fanciful concept confined to the realm of video games and sci-fi. However, the chilling warning contained within the newly released open letter insists that the technology will be readily available within years, not decades, and that action must be taken now if we are to prevent the birth of a new paradigm of modern warfare.

Consider now the implications of this. According to the open letter, many now consider weaponized AI to be the third revolution in modern warfare, after gunpowder and nuclear arms. However, for the previous two there have always been powerful disincentives to utilize the technology. For rifles to be used in the field, you need a soldier to wield the weapon, and this in turn means putting a soldier's life at risk.

With the nuclear revolution you had to consider the costly and difficult nature of acquiring the materials and expertise required to make a bomb, not to mention the monstrous loss of life and international condemnation that would inevitably follow the deployment of such a weapon, and the threat of mutually assured destruction (MAD). These deterrent factors have resulted in only two bombs being detonated in conflict over the course of the nuclear era to date.

The true danger of an AI war machine is that it lacks these barriers to conflict. AI could replace the need to risk a soldier's life in the field, and its deployment would not bring down the ire of the international community in the same way as the launch of an ICBM. Furthermore, according to the open letter, armed AI drones with the capacity to hunt and kill persons independent of human command would be cheap and relatively easy to mass produce.

The technology will have the overall effect of making a military incursion less costly and more appealing, essentially lowering the threshold for conflict. Furthermore, taking the kill decision out of the hands of human beings by its nature removes the element of human compassion and a reasoning process which, at least for the foreseeable future, cannot be matched by a mere machine.

Another chilling aspect of weaponized AI tech that the letter highlights is the potential of such military equipment to make its way into the hands of despots and warlords who wouldn't think twice about deploying the machines as a tool to check discontent, or even perform ethnic cleansing.

“Many of the leading scientists in our field have put their names to this cause,” says Toby Walsh, professor of Artificial Intelligence at the University of New South Wales (UNSW) and NICTA. “With this Open Letter, we hope to bring awareness to a dire subject that, without a doubt, will have a vicious impact on the whole of mankind. We can get it right at this early stage, or we can stand idly by and witness the birth of a new era of warfare. Frankly, that’s not something many of us want to see. Our call to action is simple: ban offensive autonomous weapons, and in doing so, securing a safe future for us all.”

Source: The Future of Life Institute