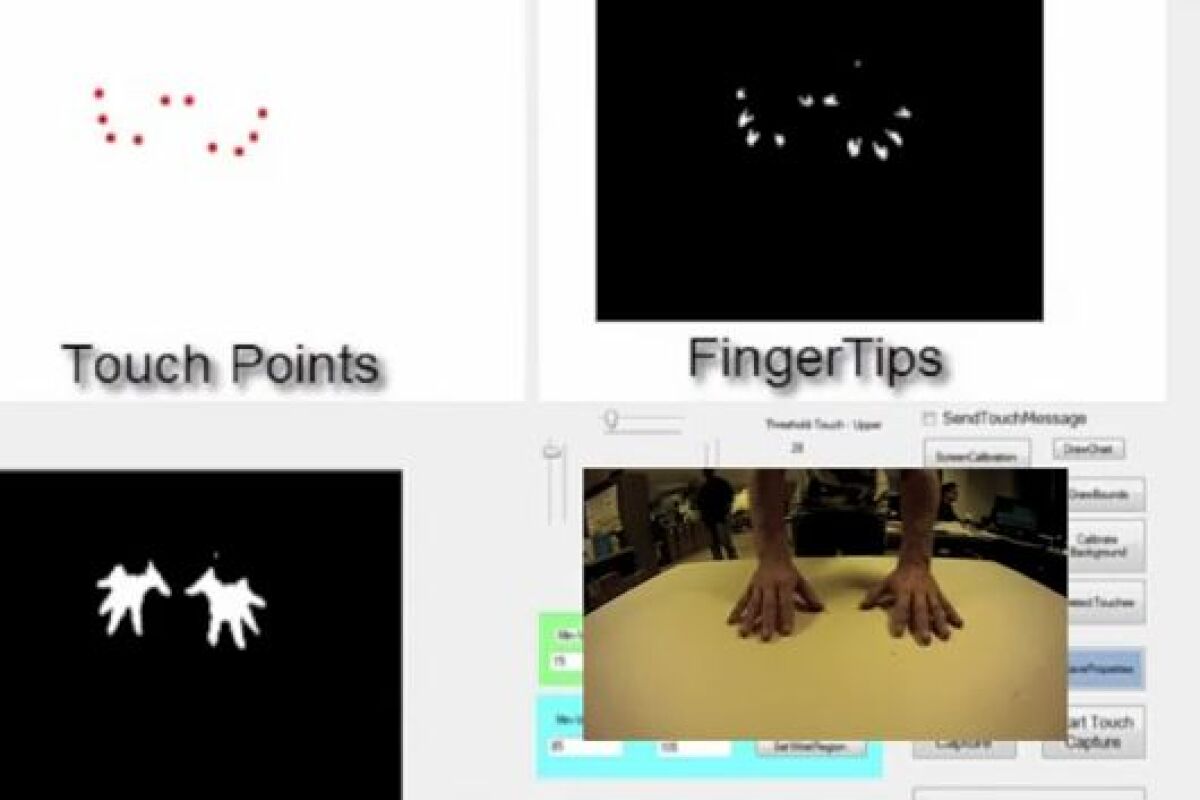

The world may not be your oyster, but thanks to technology being developed at Indiana’s Purdue University, it may soon be your multi-touch screen. Researchers there have created an “extended multitouch” system consisting of a computer, a video projector and a Kinect camera. It can turn any surface into a touchscreen interface that tracks multiple hands simultaneously.

The system projects an image of the interface onto a flat surface such as a tabletop or wall; the material the surface is made of doesn't matter. The depth-sensing Kinect camera is trained on that image and detects how far the user’s hands are from it.
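The article doesn't say how Purdue's software decides that a finger is actually touching the surface. A common technique with depth cameras is to capture a depth map of the empty surface once, then treat pixels in each new frame that sit just slightly in front of that surface as contact points. The Python below is a minimal sketch of that generic idea, not Purdue's implementation. It assumes depth values in millimetres, with 0 meaning "no reading", and uses hypothetical thresholds `near_mm` and `far_mm`. It also leaves out the hand model entirely.

```python
from typing import List, Tuple

DepthFrame = List[List[int]]  # depth in millimetres, row-major; 0 = no reading


def touch_mask(background: DepthFrame, frame: DepthFrame,
               near_mm: int = 5, far_mm: int = 20) -> List[List[bool]]:
    """Flag pixels where something sits just above the surface.

    background: depth of the empty surface, captured once with no hands present.
    frame: the current depth frame.
    near_mm / far_mm: hypothetical thresholds (assumptions, not Purdue's values).
    A pixel counts as touching when it is closer than the surface by at least
    near_mm (to ignore sensor noise) and at most far_mm (to ignore hovering).
    """
    mask = []
    for bg_row, row in zip(background, frame):
        mask.append([
            d > 0 and near_mm <= (bg - d) <= far_mm  # skip invalid 0 readings
            for bg, d in zip(bg_row, row)
        ])
    return mask


def touch_points(mask: List[List[bool]]) -> List[Tuple[int, int]]:
    """Return (row, col) coordinates of every pixel flagged as touching."""
    return [(r, c) for r, row in enumerate(mask)
            for c, hit in enumerate(row) if hit]


# Example: a flat surface 1,000 mm from the camera.
background = [[1000] * 4 for _ in range(2)]
frame = [
    [1000, 990, 950, 998],  # fingertip 10 mm up, hand hovering 50 mm up, 2 mm noise
    [0, 1000, 985, 1000],   # invalid reading, bare surface, fingertip 15 mm up
]
print(touch_points(touch_mask(background, frame)))  # [(0, 1), (1, 2)]
```

The band between the two thresholds is the key design choice: too narrow and real touches are missed because of sensor noise, too wide and hovering fingers register as presses. A real system would also group neighbouring touch pixels into individual fingertips rather than reporting raw pixels.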

To identify those hands as hands, software running on the computer incorporates a digital hand model that lets the system recognize an object as a hand when it “sees” one through the camera. The model can also distinguish between different parts of the hand, and between left and right hands.

“The camera sees where your hands are, which fingers you are pressing on the surface, tracks hand gestures and recognizes whether there is more than one person working at the same time,” explained Karthik Ramani, a Purdue professor of mechanical engineering. “You could do precision things, like writing with a pen, with your dominant hand and more general things, such as selecting colors, using the non-dominant hand.”

So far, lab tests have shown the system to be 98 percent accurate at determining hand posture, a key part of recognizing hand gestures. Newer, more precise cameras should push that figure even higher, bringing the extended multitouch system closer to practical use.

“It might be used for any interior surface to interact virtually with a computer,” said Ramani. “You could use it for living environments, to turn appliances on, in a design studio to work on a concept or in a laboratory, where a student and instructor interact.”

UK-based Light Blue Optics has already developed a projector that turns any surface into a basic touchscreen. Purdue's multi-touch system can be seen in use in the video below.

Source: Purdue University
