It would be great if smartphones could sense moods – especially when they've dropped a call three times in five minutes. Engineers at the University of Rochester in Rochester, New York, have developed a prototype app that gives phones a form of emotional intelligence, with potential applications in phones and beyond.

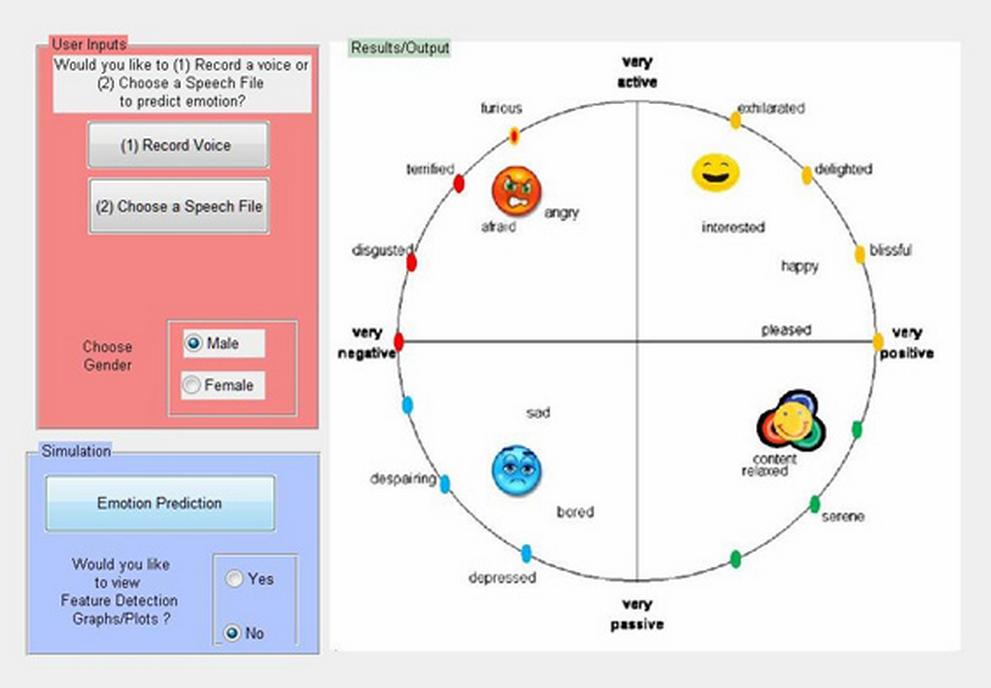

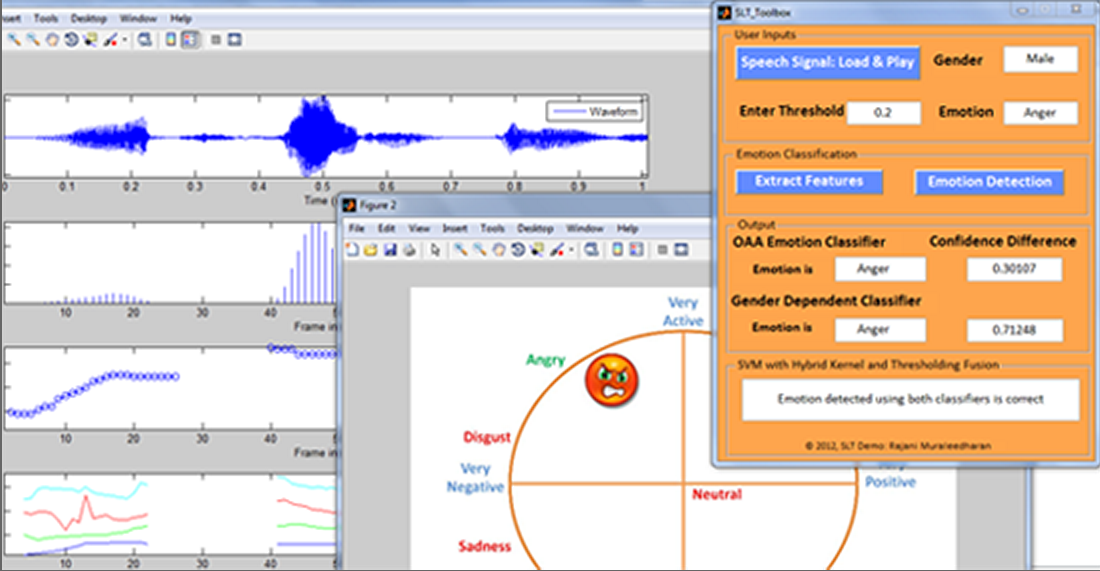

Described by the team on December 5 at the IEEE Workshop on Spoken Language Technology in Miami, Florida, the app analyzes twelve features of speech, such as pitch and volume, and uses them to identify one of six emotional states.
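The article names pitch and volume as two of the twelve features but does not list the rest or say how they were measured. As a minimal sketch only, assuming two standard textbook techniques (autocorrelation for pitch and root-mean-square energy for volume, not confirmed as the Rochester team's method), the code below turns one short frame of audio samples into a feature vector. The function names `pitch_autocorr`, `rms_volume`, and `extract_features` and the 75 to 400 Hz pitch search range are hypothetical choices made for illustration.

```python
import math

def rms_volume(frame):
    """Root-mean-square amplitude of one frame of samples: a simple loudness measure."""
    return math.sqrt(sum(x * x for x in frame) / len(frame))

def pitch_autocorr(frame, sample_rate, fmin=75.0, fmax=400.0):
    """Estimate pitch (fundamental frequency, Hz) as the lag with the highest
    autocorrelation inside an assumed speech range of fmin..fmax Hz."""
    min_lag = int(sample_rate / fmax)
    max_lag = min(int(sample_rate / fmin), len(frame) - 1)
    best_lag, best_score = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        # Similarity between the frame and a copy of itself shifted by `lag` samples.
        score = sum(frame[i] * frame[i + lag] for i in range(len(frame) - lag))
        if score > best_score:
            best_lag, best_score = lag, score
    return sample_rate / best_lag

def extract_features(frame, sample_rate):
    """Hypothetical two-feature vector [pitch_hz, volume]; the real system used twelve."""
    return [pitch_autocorr(frame, sample_rate), rms_volume(frame)]

# Usage: a synthetic 200 Hz tone, 0.1 s long at 8 kHz, standing in for a voiced frame.
sr = 8000
frame = [0.5 * math.sin(2 * math.pi * 200 * n / sr) for n in range(800)]
pitch, volume = extract_features(frame, sr)
print(round(pitch, 1), round(volume, 3))  # 200.0 0.354
```

Real speech would be split into many overlapping frames, with statistics such as the mean and range of each feature taken across the recording; the synthetic tone simply makes the expected pitch easy to check.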

Though other emotion-evaluating systems exist, the University of Rochester approach is surprisingly simple. Instead of studying a speaker’s gestures and facial expressions or trying to work out the meaning of words or the structure of a conversation, the Rochester system relies strictly on the inherent vocal cues that people use to pick up on emotion. It’s a bit like how you can sense the mood of someone on the phone from Kenya even though you don’t speak a word of Swahili.

"We actually used recordings of actors reading out the date of the month – it really doesn't matter what they say, it's how they're saying it that we're interested in," explained Wendi Heinzelman, professor of electrical and computer engineering. The actors read the words with different emotions in their voices. The engineers then had the system analyze twelve features of each recording's speech and assign it one of six emotions, including "sad," "happy," "fearful," "disgusted," and "neutral." The system identified the emotion correctly 81 percent of the time, compared with 55 percent for other systems.
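The article reports the accuracy but not which classifier the team used. To show what "assign one of six emotions" and "correct 81 percent of the time" mean mechanically, here is an illustrative sketch that swaps in a nearest-centroid classifier, a deliberately simple stand-in rather than the Rochester method. It assumes each recording has already been reduced to a feature vector; the two features, the three labels, and every number below are invented toy values, not data from the study, and all function names are hypothetical.

```python
import math
from collections import defaultdict

def fit_scaler(vectors):
    """Per-feature mean and standard deviation, so Hz and amplitude become comparable."""
    n = len(vectors)
    means = [sum(col) / n for col in zip(*vectors)]
    stds = [math.sqrt(sum((x - m) ** 2 for x in col) / n) or 1.0
            for col, m in zip(zip(*vectors), means)]
    return means, stds

def scale(v, means, stds):
    """Standardize one feature vector using the training statistics."""
    return [(x - m) / s for x, m, s in zip(v, means, stds)]

def train_centroids(vectors, labels):
    """Average the feature vectors belonging to each emotion label."""
    groups = defaultdict(list)
    for v, label in zip(vectors, labels):
        groups[label].append(v)
    return {label: [sum(col) / len(vs) for col in zip(*vs)] for label, vs in groups.items()}

def classify(v, centroids):
    """Pick the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(v, centroids[label]))

def accuracy(predicted, actual):
    """Fraction of recordings labeled correctly (0.81 would mean 81 percent)."""
    return sum(p == a for p, a in zip(predicted, actual)) / len(actual)

# Invented toy data: [pitch_hz, volume] per recording, not values from the study.
train_x = [[220, 0.30], [230, 0.32], [140, 0.08], [150, 0.10], [260, 0.40], [270, 0.42]]
train_y = ["happy", "happy", "sad", "sad", "fearful", "fearful"]
test_x = [[225, 0.31], [145, 0.09], [265, 0.41], [240, 0.35]]
test_y = ["happy", "sad", "fearful", "fearful"]

means, stds = fit_scaler(train_x)
centroids = train_centroids([scale(v, means, stds) for v in train_x], train_y)
predicted = [classify(scale(v, means, stds), centroids) for v in test_x]
print(predicted, accuracy(predicted, test_y))  # ['happy', 'sad', 'fearful', 'happy'] 0.75
```

The last test recording sits between the "happy" and "fearful" examples and is mislabeled, which is how an accuracy below 100 percent arises: 3 correct out of 4 gives 0.75, just as 81 correct out of every 100 would give the reported 81 percent.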

The team has used the system to build a prototype app that displays a happy or sad face depending on the speaker’s mood. "The research is still in its early days," said Heinzelman, "but it is easy to envision a more complex app that could use this technology for everything from adjusting the colors displayed on your mobile to playing music fitting to how you're feeling after recording your voice."

Psychologists could also benefit from the technology. "A reliable way of categorizing emotions could be very useful in our research," said collaborating Rochester psychologist Melissa Sturge-Apple. "It would mean that a researcher doesn't have to listen to the conversations and manually input the emotion of different people at different stages."

Currently, the technology is highly speaker-dependent. Like many speech recognition systems, it works best when trained for a particular person; when it's used with someone else, its accuracy drops to 30 percent. The team is now working to train the system on voices of people in the same age group and of the same gender, to make it more practical in real-life situations.

Source: University of Rochester